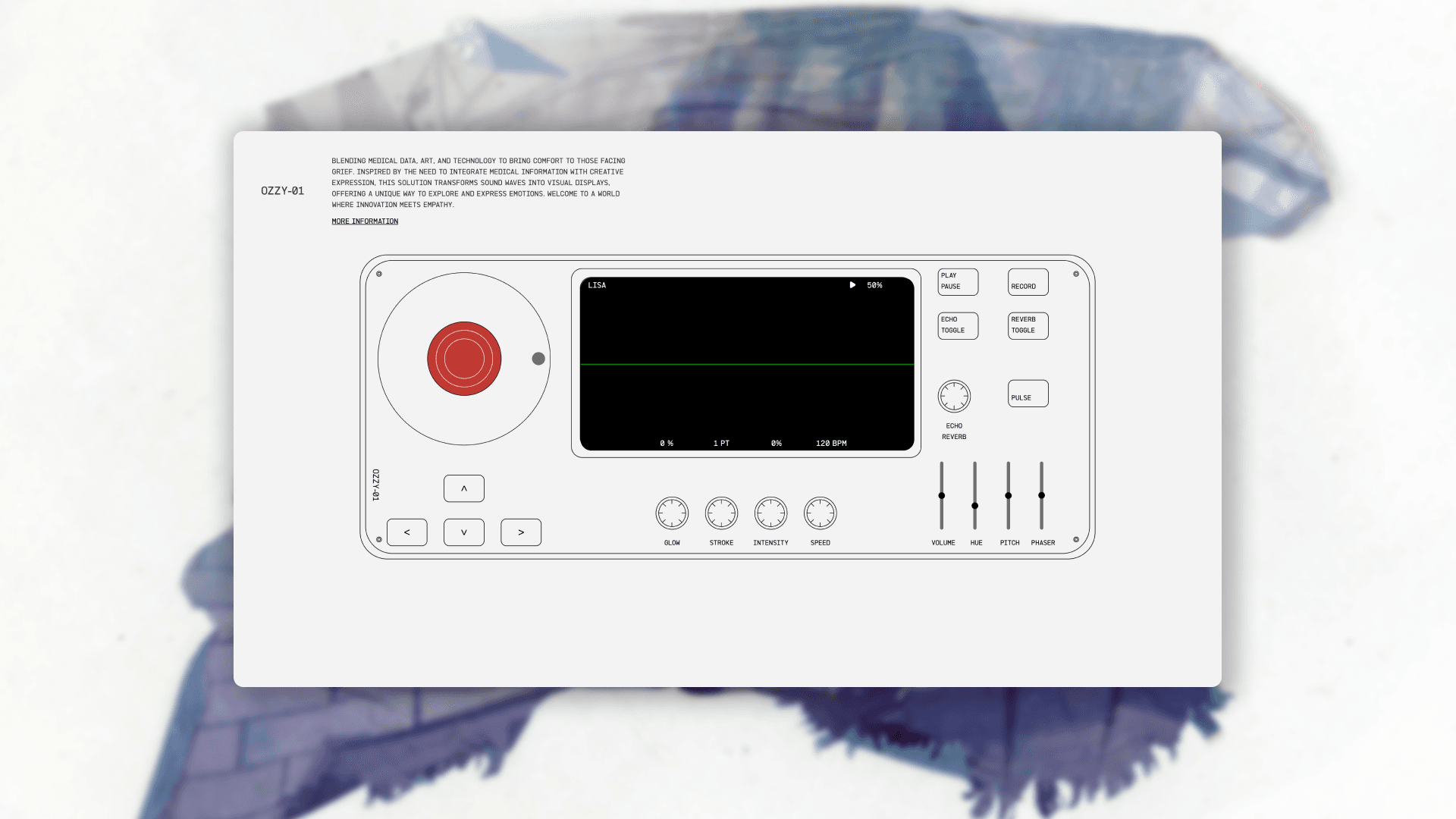

Ozzy-O1

2024

Client

Self-initiated project

Industry

Digital Experience & Interactive Media

Duration

3 months

Role

Designer and Developer

Services

UI/UX Design

Creative Coding

Frontend Development

Technologies

Next.js

GSAP

Figma

Overview

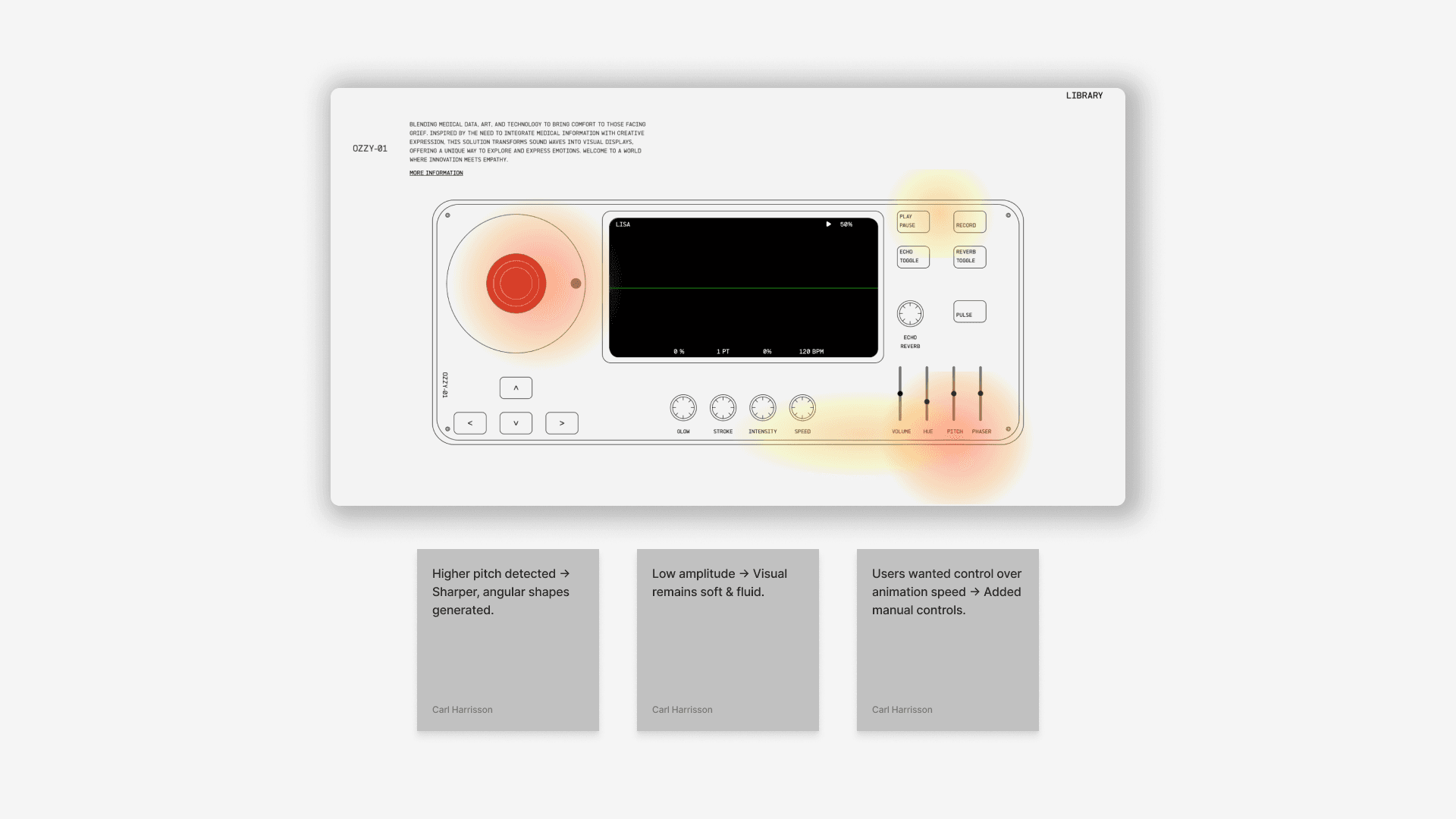

Traditional digital experiences often lack personal, emotional resonance. Ozzy-O1 bridges this gap by generating visuals from voice input, giving users a way to interact with their memories.

A visual representation of a voice recording transformed into generative patterns, capturing the emotional essence of sound.

Context

Built using Next.js and GSAP, Ozzy-O1 transforms voice recordings into dynamic visuals, offering an interactive way to engage with emotions and past experiences.
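To make the voice-to-visual idea concrete, here is a minimal sketch of one possible mapping: normalized amplitude values are placed around a ring, so louder moments push points further from the center. The function name, the ring layout, and the default radii are assumptions for illustration and are not taken from the Ozzy-O1 codebase. In a browser, amplitudes like these might come from the Web Audio API's `AnalyserNode`.

```typescript
// Hypothetical sketch: names and defaults are illustrative, not from the project.
interface Point {
  x: number;
  y: number;
}

/**
 * Maps normalized amplitudes (expected range 0..1) to points on a ring.
 * Each sample gets an evenly spaced angle; its amplitude pushes the point
 * outward from baseRadius by up to maxOffset.
 */
function amplitudesToRing(
  amplitudes: number[],
  baseRadius: number = 100,
  maxOffset: number = 50
): Point[] {
  const count = amplitudes.length;
  return amplitudes.map((amp, i) => {
    // Clamp so out-of-range input cannot distort the shape.
    const clamped = Math.min(1, Math.max(0, amp));
    const angle = (2 * Math.PI * i) / count;
    const radius = baseRadius + clamped * maxOffset;
    return { x: radius * Math.cos(angle), y: radius * Math.sin(angle) };
  });
}
```

The resulting points could then be drawn on a canvas or handed to an animation library to tween between successive frames.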

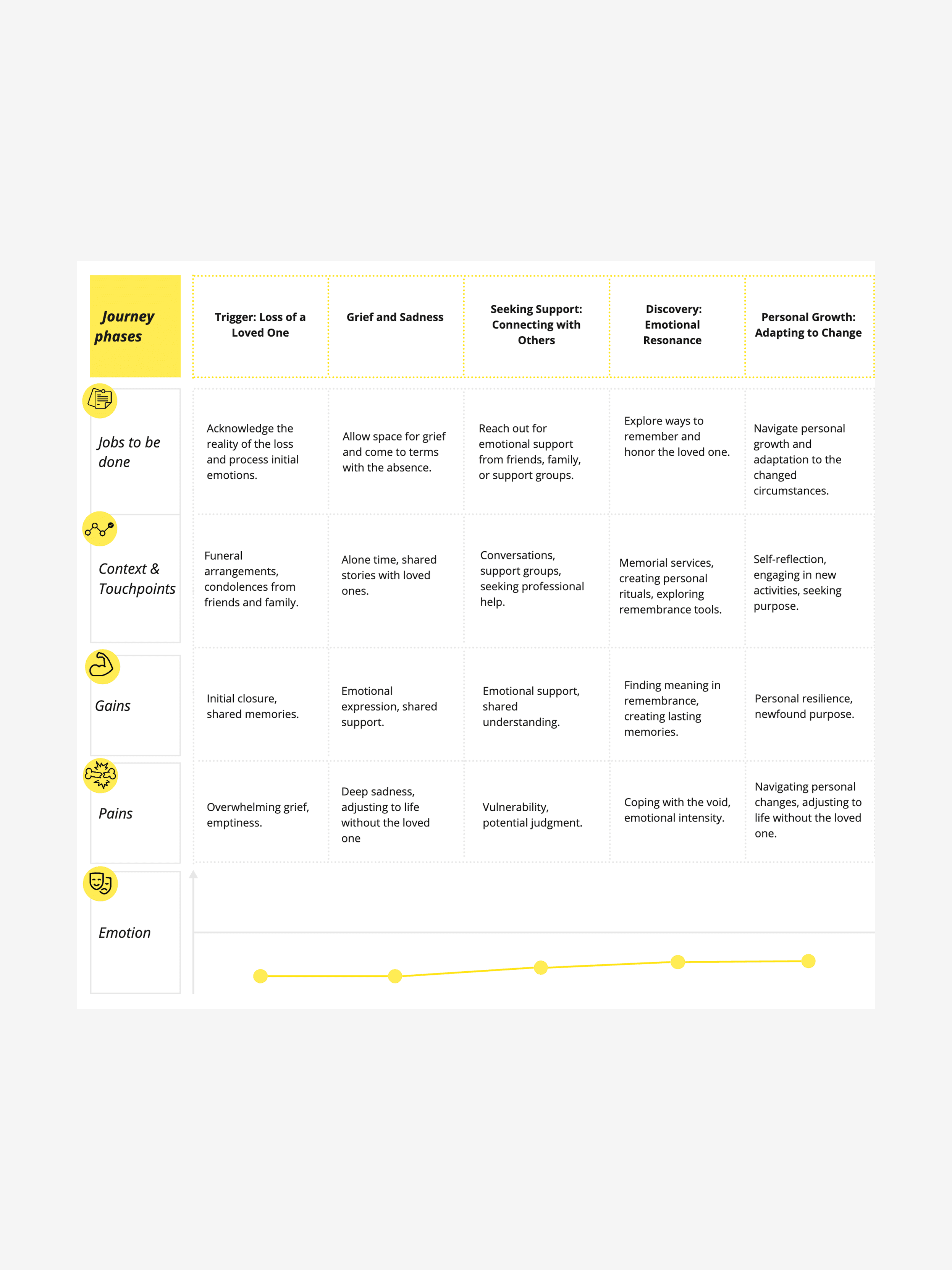

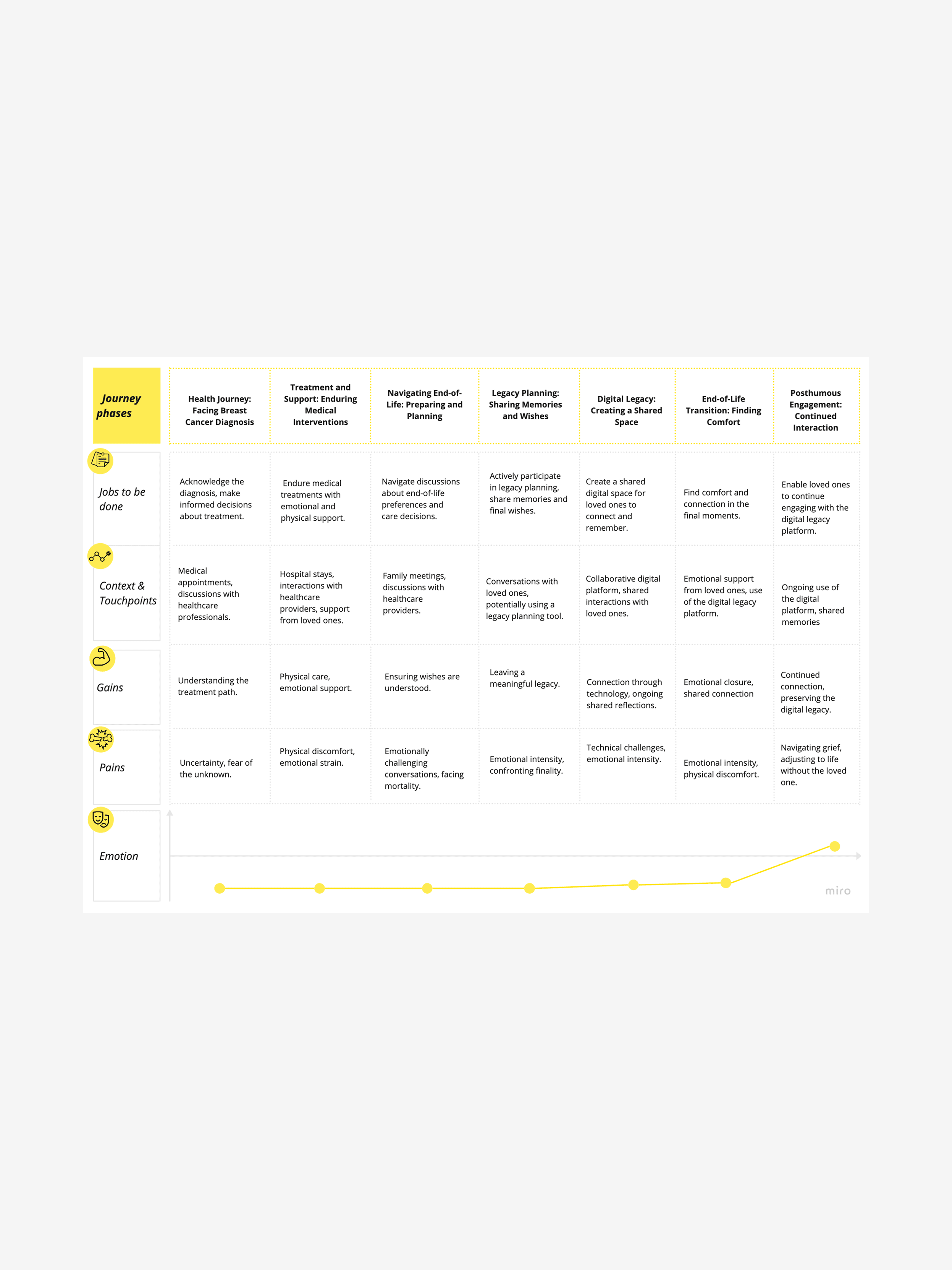

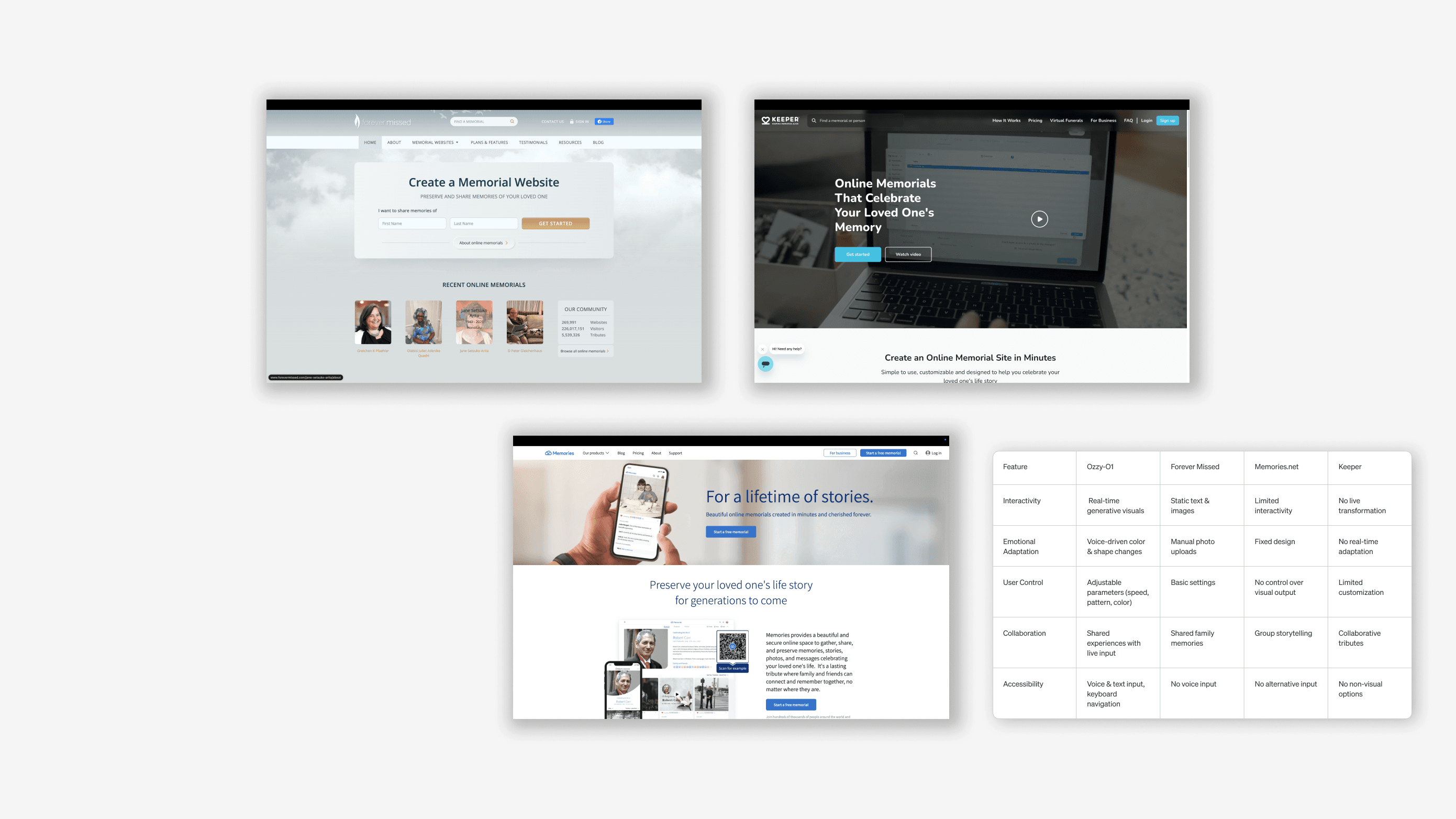

Research & Insights

Methodology

User Interviews

Conducted in-depth interviews to understand how users engage with digital memory experiences.

Usability Testing

Tested different animation flows and interaction models to improve engagement and ease of use.

Comparative Analysis

Reviewed existing digital memorialization tools to identify gaps and opportunities for improvement.

Technical Feasibility Study

Explored different WebGL and GSAP techniques for rendering smooth, interactive generative visuals in real time.
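One technique in this space is easing, which GSAP offers through its built-in eases. The sketch below is a hand-written cubic ease-in-out that shows the underlying idea. It is not GSAP's implementation or the project's code, and `easeInOutCubic` and `tween` are hypothetical names.

```typescript
// Hypothetical sketch of an easing curve; not GSAP's implementation.

/**
 * Cubic ease-in-out: slow start, fast middle, slow end.
 * Input t is progress in 0..1 (clamped); output is eased progress in 0..1.
 */
function easeInOutCubic(t: number): number {
  const c = Math.min(1, Math.max(0, t));
  return c < 0.5 ? 4 * c * c * c : 1 - Math.pow(-2 * c + 2, 3) / 2;
}

/** Interpolates between two values using the eased progress. */
function tween(from: number, to: number, t: number): number {
  return from + (to - from) * easeInOutCubic(t);
}
```

Compared with linear interpolation, the eased value changes slowly near the start and end of a transition, which is what makes it feel less abrupt.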

Comparative analysis breakdown.

Key Findings

Interactive Engagement

Users responded positively to the ability to see real-time generative visuals adapting to voice input.

Emotional Connection

The ability to engage with memories in a non-static format helped users feel more connected to past moments.

Performance Considerations

High-performance rendering was crucial for maintaining smooth interactions, especially on lower-powered devices.
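One common way to keep interactions smooth on lower-powered devices is to scale visual detail according to measured frame time. The following is a minimal sketch of that idea, assuming a particle-based visual. The function name, the thresholds, and the particle limits are all hypothetical and not taken from the project.

```typescript
// Hypothetical sketch: thresholds and limits are illustrative assumptions.
interface QualityOptions {
  targetFrameMs: number; // frame budget, e.g. about 16.7 ms for 60 fps
  minParticles: number;
  maxParticles: number;
}

const DEFAULT_QUALITY: QualityOptions = {
  targetFrameMs: 16.7,
  minParticles: 100,
  maxParticles: 5000,
};

/**
 * Returns a new particle count based on the recent average frame time:
 * reduce by 20% when frames run clearly over budget, grow by 10% when
 * there is clear headroom, otherwise keep the current count.
 */
function adjustParticleCount(
  current: number,
  avgFrameMs: number,
  options: QualityOptions = DEFAULT_QUALITY
): number {
  let next = current;
  if (avgFrameMs > options.targetFrameMs * 1.2) {
    next = current * 0.8;
  } else if (avgFrameMs < options.targetFrameMs * 0.8) {
    next = current * 1.1;
  }
  const rounded = Math.round(next);
  return Math.min(options.maxParticles, Math.max(options.minParticles, rounded));
}
```

The dead zone between the two thresholds avoids oscillating between quality levels when frame time hovers near the budget.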

Accessibility & Inclusivity

Users highlighted the need for alternative interaction methods, including text-based input and keyboard navigation.

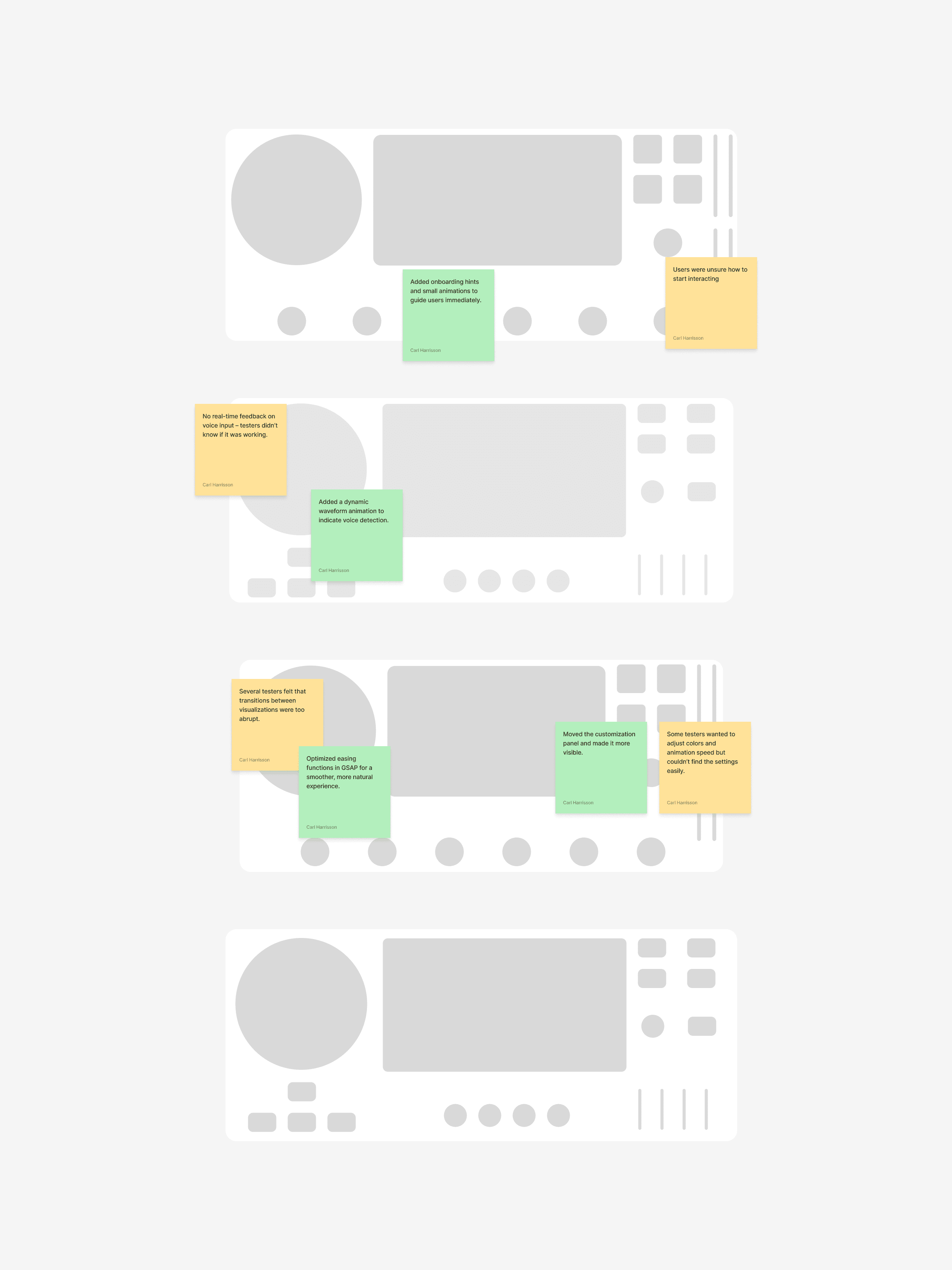

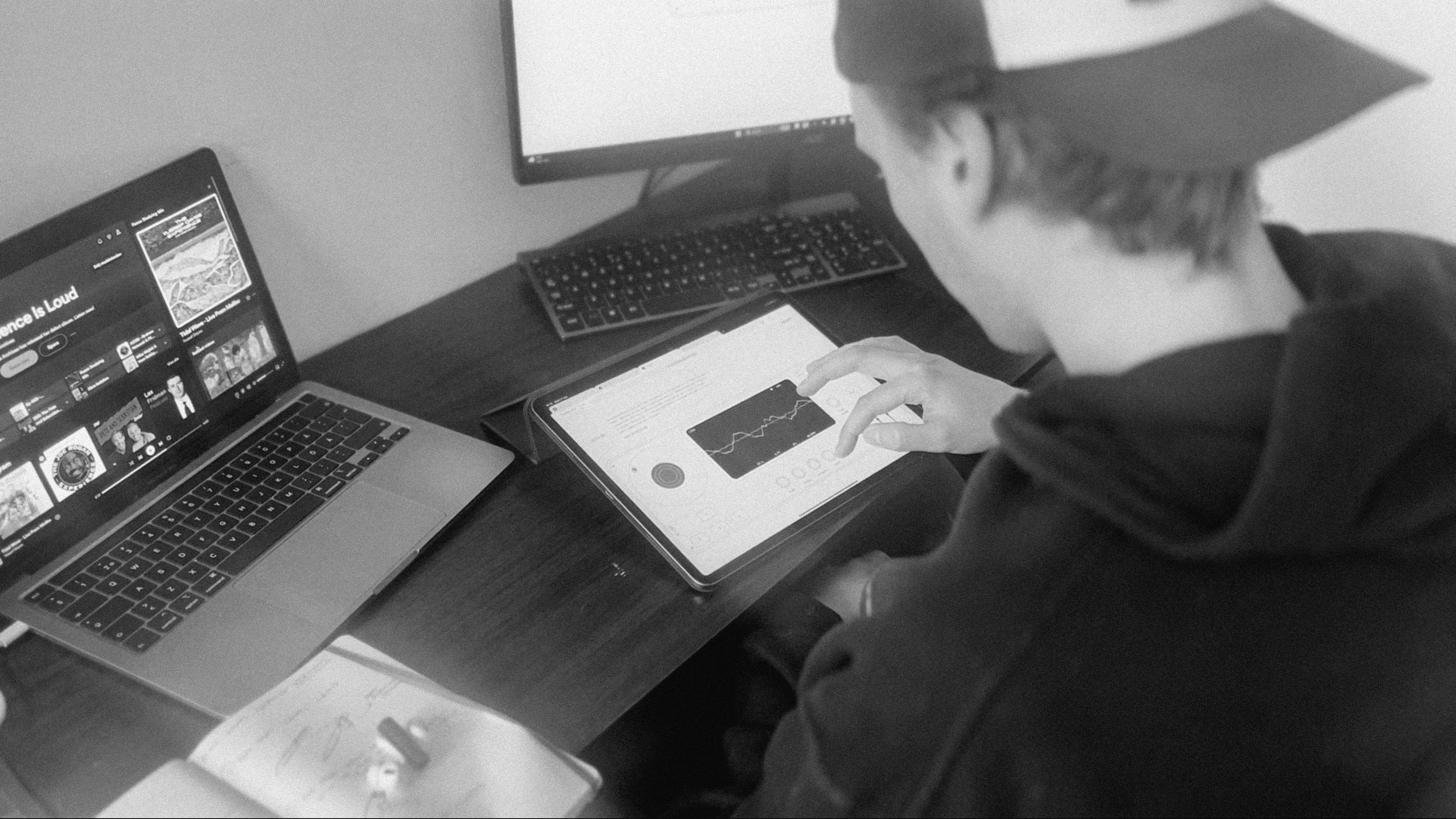

Testing & Iteration

User testing of Ozzy-O1’s generative visuals for interaction and responsiveness.

Usability Testing [20/20]

Conducted comprehensive usability testing with 20 participants across different user roles.

Key Findings

The animations were engaging, but some transitions felt too abrupt.

The customization options were well-received, but users wanted more control over the visual output.

The onboarding process needed more guidance to help users understand the interaction model.

Voice input detection worked well, but users wanted an option to upload pre-recorded audio files.

Refinements

Smoothed animation transitions using GSAP for better flow.

Added user-adjustable parameters for visual effects.

Improved onboarding with step-by-step instructions and visual cues.

Implemented audio file upload functionality for greater accessibility.
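For the audio upload refinement, a file would typically be checked before it is processed. The sketch below shows one way such a check might look. The accepted MIME types, the size limit, and all names are assumptions for illustration, since the case study does not specify them.

```typescript
// Hypothetical sketch: the allowed types and size limit are assumptions.
interface UploadCandidate {
  name: string;
  mimeType: string;
  sizeBytes: number;
}

type ValidationResult = { ok: true } | { ok: false; reason: string };

const ALLOWED_MIME_TYPES: string[] = ["audio/mpeg", "audio/wav", "audio/ogg"];
const MAX_SIZE_BYTES = 10 * 1024 * 1024; // 10 MiB, an assumed limit

/** Checks type and size before the file is handed to the audio pipeline. */
function validateAudioUpload(file: UploadCandidate): ValidationResult {
  if (ALLOWED_MIME_TYPES.indexOf(file.mimeType) === -1) {
    return { ok: false, reason: `Unsupported type: ${file.mimeType}` };
  }
  if (file.sizeBytes <= 0) {
    return { ok: false, reason: "File is empty" };
  }
  if (file.sizeBytes > MAX_SIZE_BYTES) {
    return { ok: false, reason: "File is too large" };
  }
  return { ok: true };
}
```

Returning a reason alongside the failure lets the interface explain the problem to the user instead of rejecting the file silently.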

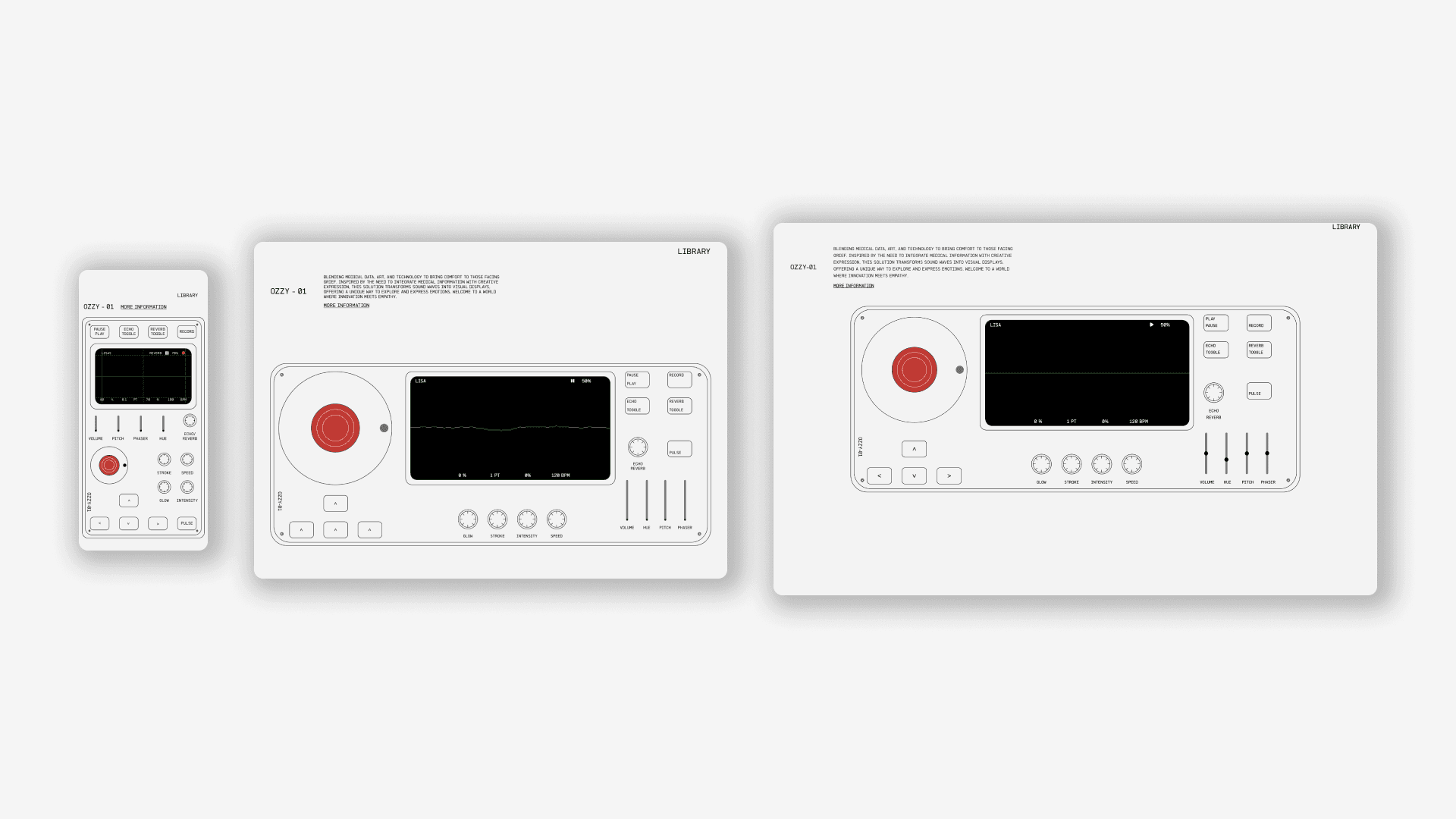

Process

Development: Ensuring seamless performance across desktop, tablet, and mobile.

Results

Stronger User Engagement

80% of testers found the interactive visual experience more engaging than traditional digital memory archives.

Performance Optimization

Optimized rendering improved animation smoothness by 35%, enhancing real-time feedback.

Improved Usability

Users found the final onboarding experience intuitive and easy to follow.

Lessons Learned

Next Steps

Expanded Customization

Introduce additional controls for users to personalize the generated visuals, such as color schemes, animation speed, and pattern complexity.
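The controls listed here could be modeled as a small settings object with defaults and range checks. This is a minimal sketch under assumed names and ranges: the three fields mirror the options mentioned above, while the scheme names, default values, and limits are hypothetical.

```typescript
// Hypothetical sketch: scheme names, defaults, and ranges are assumptions.
type ColorScheme = "warm" | "cool" | "mono";

interface VisualSettings {
  colorScheme: ColorScheme;
  animationSpeed: number; // playback multiplier, assumed range 0.25..4
  patternComplexity: number; // integer level, assumed range 1..10
}

const DEFAULT_SETTINGS: VisualSettings = {
  colorScheme: "warm",
  animationSpeed: 1,
  patternComplexity: 5,
};

function clamp(value: number, min: number, max: number): number {
  return Math.min(max, Math.max(min, value));
}

/** Fills in missing fields with defaults and clamps numbers to safe ranges. */
function resolveSettings(input: Partial<VisualSettings>): VisualSettings {
  const speed = input.animationSpeed ?? DEFAULT_SETTINGS.animationSpeed;
  const complexity = input.patternComplexity ?? DEFAULT_SETTINGS.patternComplexity;
  return {
    colorScheme: input.colorScheme ?? DEFAULT_SETTINGS.colorScheme,
    animationSpeed: clamp(speed, 0.25, 4),
    patternComplexity: Math.round(clamp(complexity, 1, 10)),
  };
}
```

Clamping at this boundary means the rendering code never has to handle extreme values coming from a slider or a saved preset.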

Enhanced Performance

Further optimize rendering efficiency to improve interaction speed, especially on lower-powered mobile devices.

Accessibility Improvements

Improve keyboard navigation, screen reader support, and text-based interaction options for a wider range of users.

Audio Input Expansion

Support additional audio formats and introduce waveform analysis to create more diverse generative patterns.
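As a rough illustration of what waveform analysis could extract, the sketch below computes three standard features from a buffer of samples: RMS level (loudness), peak amplitude, and zero-crossing rate (a coarse indicator of how noisy or high-pitched the signal is). The function and field names are hypothetical, and the choice of features is an assumption rather than the project's plan.

```typescript
// Hypothetical sketch: feature selection and names are illustrative.
interface WaveformFeatures {
  rms: number; // root-mean-square level
  peak: number; // largest absolute sample value
  zeroCrossingRate: number; // fraction of adjacent sample pairs that change sign
}

/** Computes simple time-domain features from samples in the range -1..1. */
function analyzeWaveform(samples: number[]): WaveformFeatures {
  if (samples.length === 0) {
    return { rms: 0, peak: 0, zeroCrossingRate: 0 };
  }
  let sumSquares = 0;
  let peak = 0;
  let crossings = 0;
  for (let i = 0; i < samples.length; i++) {
    const s = samples[i];
    sumSquares += s * s;
    peak = Math.max(peak, Math.abs(s));
    // Count a crossing when the sign differs from the previous sample.
    if (i > 0 && (samples[i - 1] >= 0) !== (s >= 0)) {
      crossings++;
    }
  }
  const pairs = samples.length - 1;
  return {
    rms: Math.sqrt(sumSquares / samples.length),
    peak,
    zeroCrossingRate: pairs > 0 ? crossings / pairs : 0,
  };
}
```

Each feature could drive a different visual property, for example RMS controlling size and zero-crossing rate controlling pattern density, which would give different recordings visibly different results.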